AI Changes How Software Gets Written. It Doesn’t Change Who’s Accountable.

AI has dramatically reduced the effort required to produce software.

What it hasn’t reduced is responsibility.

As AI becomes part of the development process, organizations face a subtle but important shift. Software can be created faster than ever before, but the cost of misunderstandings, oversights, and poor decisions has not gone down. In many cases, it has gone up.

The central question is no longer “Can we build this?”

It’s “Can we trust what we’re approving?”

Speed has increased. Risk hasn’t gone away.

AI excels at accelerating known work. It can generate large amounts of code quickly, follow established patterns, and produce output that looks polished and complete.

From an organizational perspective, this increases velocity. And velocity is valuable.

But velocity also magnifies the impact of mistakes. When software moves through the delivery process faster and review and oversight don't scale with it, problems surface later, under more pressure, and at higher cost.

AI doesn’t eliminate risk.

It changes where it accumulates.

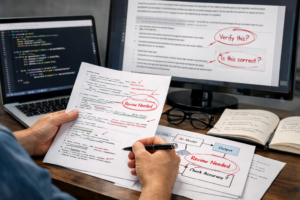

Why AI-generated code is easy to approve too quickly

One of the most challenging aspects of AI-generated code is that it often looks correct.

It runs. It passes basic tests. It aligns with familiar structures. On the surface, there’s little reason to slow it down.

The problem is that appearance is not the same as correctness.

AI has no understanding of business context, historical constraints, contractual obligations, or the real-world consequences of failure. It optimizes for plausible output, not defensible decisions.

That means errors tend to show up not as obvious failures but in less common situations: scenarios that are valid but weren't anticipated. These are the moments when systems are challenged and organizations are judged.

AI doesn’t understand your system or its consequences

AI has no awareness of:

- Why a system exists

- Which tradeoffs were deliberate

- Where tolerance for failure ends

- Which assumptions are legally, financially, or operationally significant

It doesn’t know which parts of a system are fragile or which decisions were made to satisfy non-technical constraints.

As a result, AI can produce code that is syntactically correct but contextually wrong. And context is where most real-world failures occur.

Verification and review matter more, not less

A common misconception is that AI lowers the bar for experience. In reality, it raises it.

As the cost of producing code drops, the most important work shifts to evaluation, review, and judgment. Someone still needs to understand what the system is doing, why it behaves the way it does, and how it will fail.

Organizations that treat AI as a shortcut tend to accumulate invisible risk. Organizations that treat it as an accelerator for experienced teams gain efficiency without losing control.

The difference is not tooling.

It’s discipline.

Accountability doesn’t move just because AI is involved

When a system fails, the questions are predictable:

- Why did this happen?

- Who approved it?

- How will we prevent it next time?

AI does not answer those questions.

Responsibility still sits with the organization. With leadership. With the teams making and approving decisions.

AI changes how software is produced.

It does not change who is accountable for the outcome.

What effective organizations are doing differently

Organizations that use AI effectively don’t treat it as an authority. They treat it as a drafting and exploration tool.

They:

- Increase review rigor instead of reducing it

- Rely more heavily on experienced oversight

- Maintain clear standards and ownership

- Prioritize explainability over speed

In these environments, AI becomes leverage.

Without those controls, it becomes exposure.

What this means in practice

AI will continue to reshape software development. Ignoring it isn’t realistic, and neither is assuming it will replace human judgment.

The organizations that benefit most will be the ones that recognize where AI helps and where responsibility still requires experienced people making deliberate decisions.

AI can help produce software faster.

It can’t decide what’s acceptable risk.

That still requires people who understand the system and are willing to own the outcome.

In the end, AI works best as one tool inside a larger system of review, ownership, and responsibility. Those are the same principles that guide how we approach software and consulting work.